Neural Networks

Origins

- Neural networks (NNs) are algorithms originally inspired by, and loosely designed to mimic, the brain.

- Popular in the 1980s and early 1990s, but popularity waned in the late 1990s.

- Recent resurgence as a state-of-the-art technique in various applications.

- Artificial neural networks are far simpler than the brain’s structure.

The Brain

- Composition: Networks of neurons.

- Function: Brain activity occurs due to neuron firing.

- Neurons:

  - Connect through synapses, propagating action potentials (electrical impulses).

  - Synapses release neurotransmitters, which can be:

    - Excitatory: Increase the receiving neuron's potential, making it more likely to fire.

    - Inhibitory: Decrease the receiving neuron's potential, making it less likely to fire.

- Learning: Synapses exhibit plasticity, enabling long-term changes in connection strength.

- Scale:

  - ~10¹¹ neurons.

  - ~10¹⁴ synapses.

Neural Networks and the Brain

- Neural networks are built from simplified computational models of neurons; the classic model is the perceptron.

The Perceptron

- A threshold unit:

  - Fires if the weighted sum of inputs exceeds a threshold.

  - Analogous to a threshold gate in Boolean circuits.

```mermaid
graph TD
  Input1 -->|Weight1| Perceptron
  Input2 -->|Weight2| Perceptron
  Perceptron --> Output
```
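A threshold unit takes only a few lines of code. The sketch below is a minimal illustration, assuming the unit fires (outputs 1) when the weighted sum is strictly greater than the threshold; the function name `perceptron` and the AND/OR weights and thresholds are illustrative choices, not values from the slides.

```python
from typing import Sequence


def perceptron(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Threshold unit: return 1 if the weighted input sum exceeds the threshold, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum > threshold else 0


# Used as a Boolean threshold gate (illustrative parameters):
# weights (1, 1) with threshold 1.5 behaves like AND,
# weights (1, 1) with threshold 0.5 behaves like OR.
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, perceptron([x1, x2], [1, 1], 1.5), perceptron([x1, x2], [1, 1], 0.5))
```

Only the threshold changes between the two gates, which is the sense in which a perceptron behaves like a threshold gate.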

Soft Perceptron (Logistic)

- Replaces the hard threshold with a sigmoid activation function.

  - A “squashing” function that maps any real-valued input to a continuous output between 0 and 1.
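Swapping the hard threshold for a sigmoid gives the soft perceptron. In this sketch the threshold is subtracted from the weighted sum before squashing; `soft_perceptron` is a hypothetical name, and the sigmoid is split into two branches (an implementation choice) so that `math.exp` never overflows.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic squashing function; mathematically maps any real z into (0, 1).

    Split into two branches so math.exp never receives a large positive argument.
    """
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def soft_perceptron(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> float:
    """Weighted sum, shifted by the threshold, then squashed by the sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) - threshold
    return sigmoid(z)


# Same parameters as a hard AND gate (weights 1, 1; threshold 1.5):
# the output is now a graded value instead of exactly 0 or 1.
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, round(soft_perceptron([x1, x2], [1, 1], 1.5), 3))
```

Inputs that clear the threshold give outputs above 0.5 and inputs that do not give outputs below 0.5; $f(0) = 0.5$ sits exactly at the old threshold.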

Structure of Neural Networks

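- Composed of nodes (units) connected by directed links.

- Each link carries an activation from one unit to the next and has a numeric weight.

- Each node has:

  - Input function: Computes the weighted sum of its incoming activations.

  - Activation function: Transforms that sum into the node's activation.

  - Output: The activation, sent along the node's outgoing links.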
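The three parts of a node map directly onto code. The sketch below is one way to write it, assuming each node receives a list of incoming activations together with the weights on those links; `Unit`, `input_function` and `output` are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Callable, List


def step(x: float) -> float:
    """Hard threshold at 0 (perceptron-style activation)."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    """Logistic activation, split into branches to avoid overflow in math.exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


@dataclass
class Unit:
    weights: List[float]                   # one weight per incoming link
    activation: Callable[[float], float]   # activation function g

    def input_function(self, incoming: List[float]) -> float:
        """Weighted sum of the activations arriving on the incoming links."""
        return sum(w * a for w, a in zip(self.weights, incoming))

    def output(self, incoming: List[float]) -> float:
        """Activation g(weighted sum); this value is sent along outgoing links."""
        return self.activation(self.input_function(incoming))


# The same weights with two different activation functions.
hard = Unit(weights=[0.4, -0.2], activation=step)
soft = Unit(weights=[0.4, -0.2], activation=sigmoid)
print(hard.output([1.0, 0.5]), soft.output([1.0, 0.5]))
```

Keeping the activation function as a pluggable parameter makes the difference between a perceptron and a soft perceptron a one-argument change.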

Multi-Layer Perceptron

- Feed-Forward Process:

  - Input layer units are activated by external stimuli (e.g., sensors).

  - Each unit's input function sums the weighted activations of the units feeding into it.

  - The activation function then applies a non-linear transformation (e.g., sigmoid), and the result is passed on layer by layer until the output layer is reached.

- Inputs: Real or Boolean.

- Outputs: Real or Boolean; a network can have several output units, giving multiple outputs per input.

```mermaid
graph LR
  Input1 --> Hidden1
  Input2 --> Hidden1
  Hidden1 --> Output
  Hidden1 --> Hidden2
  Hidden2 --> Output
```
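A feed-forward pass repeats the input-function and activation step layer by layer. This sketch assumes fully connected layers, sigmoid activations and a bias per unit; it does not reproduce the exact wiring of the diagram (which also routes Hidden1 directly to Output), and `feed_forward`, its weight layout and the example numbers are illustrative, not from the slides.

```python
import math
from typing import List, Sequence, Tuple

# One layer = (weights, biases); weights[j][i] is the weight on the link
# from unit i of the previous layer to unit j of this layer.
Layer = Tuple[List[List[float]], List[float]]


def sigmoid(z: float) -> float:
    """Logistic activation, split into branches to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def feed_forward(x: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Propagate input activations through each layer in turn."""
    activations = list(x)  # input layer: set directly by the external stimulus
    for weights, biases in layers:
        # For each unit: input function (weighted sum + bias), then activation g.
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


# Hypothetical 2-2-1 network; the numbers are arbitrary.
layers = [
    ([[0.5, -0.3], [0.8, 0.2]], [0.0, 0.1]),  # 2 inputs -> 2 hidden units
    ([[1.0, -1.0]], [0.0]),                   # 2 hidden units -> 1 output
]
print(feed_forward([1.0, 0.0], layers))
```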

Summary

- Neural networks are inspired by biological neurons but are far less complex.

- Each unit computes a weighted sum of its inputs, applies an activation function, and passes the result on as its output, a simplified abstraction of neurons firing in the brain.

- Feed-forward networks like multi-layer perceptrons are foundational to many applications.

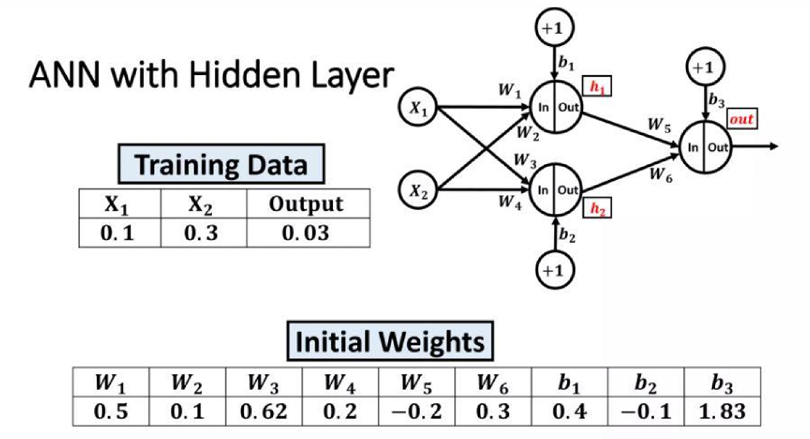

Slides Ex.2

Error Function:

$$\large E = \frac{1}{2}(y - out)^2$$
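The exercise's network can be written down directly. The sketch below assumes the wiring implied by the final gradient formulas ($W_1$, $W_2$ feed $h_1$ from $X_1$, $X_2$; $W_3$, $W_4$ feed $h_2$; $W_5$, $W_6$ feed $out$), sigmoid activations everywhere, no bias terms, and the squared error $E = \frac{1}{2}(y - out)^2$; `forward`, `error` and the example values are hypothetical.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    """Logistic activation, split into branches to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(x1: float, x2: float, w: Dict[str, float]) -> Tuple[float, float, float]:
    """Return (h1, h2, out) for the assumed 2-2-1 network without biases."""
    h1 = sigmoid(w["W1"] * x1 + w["W2"] * x2)
    h2 = sigmoid(w["W3"] * x1 + w["W4"] * x2)
    out = sigmoid(w["W5"] * h1 + w["W6"] * h2)
    return h1, h2, out


def error(y: float, out: float) -> float:
    """Squared error E = 1/2 (y - out)^2."""
    return 0.5 * (y - out) ** 2


# Hypothetical weights and training example (not taken from the slides).
w = {"W1": 0.15, "W2": 0.20, "W3": 0.25, "W4": 0.30, "W5": 0.40, "W6": 0.45}
h1, h2, out = forward(0.05, 0.10, w)
print(h1, h2, out, error(0.01, out))
```

The factor $\frac{1}{2}$ is there so that differentiating $E$ with respect to $out$ cancels the exponent and leaves $-(y - out)$.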

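1. Gradients for Output Layer Weights ($W_5$, $W_6$):

Let’s first compute the error gradient for $W_5$:

Step 1: Compute $\frac{\partial E}{\partial out}$:

$$\large \frac{\partial E}{\partial out} = -(y - out)$$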

Step 2: Derivative of output activation:

For sigmoid:

$$\large out = f(z) = \frac{1}{1 + e^{-z}}$$

$$\large f'(z) = out(1 - out)$$
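The identity $f'(z) = out(1 - out)$ is easy to check numerically. This sketch compares it with a central finite difference; the step size `eps = 1e-6`, the test points and the helper names are arbitrary choices for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, split into branches to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_prime(z: float) -> float:
    """Analytic derivative f'(z) = out * (1 - out), with out = f(z)."""
    out = sigmoid(z)
    return out * (1.0 - out)


def central_difference(f, z: float, eps: float = 1e-6) -> float:
    """Numerical derivative (f(z + eps) - f(z - eps)) / (2 * eps)."""
    return (f(z + eps) - f(z - eps)) / (2.0 * eps)


for z in (-3.0, -0.5, 0.0, 0.5, 3.0):
    print(z, sigmoid_prime(z), central_difference(sigmoid, z))
```

The derivative peaks at $z = 0$, where $f'(0) = 0.5 \cdot 0.5 = 0.25$, and shrinks toward 0 as $|z|$ grows.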

Step 3: Chain rule application:

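For $W_5$, let $z_{out} = W_5 h_1 + W_6 h_2$ be the weighted input to the output unit, so $out = f(z_{out})$:

$$\large \frac{\partial E}{\partial W_5} = \frac{\partial E}{\partial out} \cdot \frac{\partial out}{\partial z_{out}} \cdot \frac{\partial z_{out}}{\partial W_5}$$

Now substituting values (with $\frac{\partial z_{out}}{\partial W_5} = h_1$):

$$\large \frac{\partial E}{\partial W_5} = -(y - out)(out)(1 - out)(h_1)$$

Similarly, for $W_6$ (where $\frac{\partial z_{out}}{\partial W_6} = h_2$):

$$\large \frac{\partial E}{\partial W_6} = -(y - out)(out)(1 - out)(h_2)$$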
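Steps 1 to 3 translate into a few lines of code. This sketch assumes the Ex.2 wiring inferred from the final formulas (no biases, sigmoid units) and checks $\frac{\partial E}{\partial W_5}$ against a central finite difference; `output_layer_grads`, the weights, inputs and target are hypothetical.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    """Logistic function, split into branches to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(x1: float, x2: float, w: Dict[str, float]) -> Tuple[float, float, float]:
    """(h1, h2, out) for the assumed Ex.2 wiring, no biases."""
    h1 = sigmoid(w["W1"] * x1 + w["W2"] * x2)
    h2 = sigmoid(w["W3"] * x1 + w["W4"] * x2)
    out = sigmoid(w["W5"] * h1 + w["W6"] * h2)
    return h1, h2, out


def error(y: float, out: float) -> float:
    """Squared error E = 1/2 (y - out)^2."""
    return 0.5 * (y - out) ** 2


def output_layer_grads(x1: float, x2: float, y: float, w: Dict[str, float]) -> Tuple[float, float]:
    """(dE/dW5, dE/dW6) from the chain-rule result."""
    h1, h2, out = forward(x1, x2, w)
    # Steps 1 and 2: dE/dout * dout/dz_out = -(y - out) * out * (1 - out)
    delta_out = -(y - out) * out * (1.0 - out)
    # Step 3: dz_out/dW5 = h1 and dz_out/dW6 = h2
    return delta_out * h1, delta_out * h2


# Hypothetical example values (not from the slides).
w = {"W1": 0.15, "W2": 0.20, "W3": 0.25, "W4": 0.30, "W5": 0.40, "W6": 0.45}
x1, x2, y = 0.05, 0.10, 0.01

# Central finite difference on W5 as a sanity check of the formula.
eps = 1e-6
e_plus = error(y, forward(x1, x2, dict(w, W5=w["W5"] + eps))[2])
e_minus = error(y, forward(x1, x2, dict(w, W5=w["W5"] - eps))[2])
numeric_dW5 = (e_plus - e_minus) / (2 * eps)

dW5, dW6 = output_layer_grads(x1, x2, y, w)
print(dW5, numeric_dW5, dW6)
```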

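2. Gradients for Hidden Layer Weights ($W_1$, $W_2$, $W_3$, $W_4$):

For $W_1$ (connected to $h_1$, where $h_1 = f(W_1 X_1 + W_2 X_2)$):

We need to backpropagate through $h_1$:

$$\large \frac{\partial E}{\partial W_1} = \frac{\partial E}{\partial out} \cdot \frac{\partial out}{\partial h_1} \cdot \frac{\partial h_1}{\partial W_1}$$

Breaking it down:

- From earlier: $\frac{\partial E}{\partial out} = -(y - out)$

- From hidden layer $h_1$: $\frac{\partial h_1}{\partial W_1} = (h_1)(1 - h_1)(X_1)$

- Weight contribution: $h_1$ reaches the output through $W_5$, and $out = f(W_5 h_1 + W_6 h_2)$, so $\frac{\partial out}{\partial h_1} = (out)(1 - out)(W_5)$

Combining:

$$\large \frac{\partial E}{\partial W_1} = -(y - out)(out)(1 - out)(W_5)(h_1)(1 - h_1)(X_1)$$

Similarly, for $W_2$, $W_3$ and $W_4$: replace $X_1$ with the weight's own input ($X_2$ for $W_2$ and $W_4$), and for $W_3$ and $W_4$, which feed $h_2 = f(W_3 X_1 + W_4 X_2)$, replace $W_5$ and $h_1$ with $W_6$ and $h_2$.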
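The hidden-layer chain rule reuses the output-layer term and multiplies in one more weight and one more sigmoid derivative. This sketch assumes the same Ex.2 wiring (no biases, sigmoid units); `hidden_layer_grads` and the example values are hypothetical, and the test compares each result with a finite difference.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    """Logistic function, split into branches to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(x1: float, x2: float, w: Dict[str, float]) -> Tuple[float, float, float]:
    """(h1, h2, out) for the assumed Ex.2 wiring, no biases."""
    h1 = sigmoid(w["W1"] * x1 + w["W2"] * x2)
    h2 = sigmoid(w["W3"] * x1 + w["W4"] * x2)
    out = sigmoid(w["W5"] * h1 + w["W6"] * h2)
    return h1, h2, out


def error(y: float, out: float) -> float:
    """Squared error E = 1/2 (y - out)^2."""
    return 0.5 * (y - out) ** 2


def hidden_layer_grads(x1: float, x2: float, y: float, w: Dict[str, float]) -> Dict[str, float]:
    """dE/dW1..dE/dW4 by backpropagating through h1 and h2."""
    h1, h2, out = forward(x1, x2, w)
    delta_out = -(y - out) * out * (1.0 - out)        # shared output-layer term
    delta_h1 = delta_out * w["W5"] * h1 * (1.0 - h1)  # back through W5, then h1
    delta_h2 = delta_out * w["W6"] * h2 * (1.0 - h2)  # back through W6, then h2
    return {
        "W1": delta_h1 * x1,
        "W2": delta_h1 * x2,
        "W3": delta_h2 * x1,
        "W4": delta_h2 * x2,
    }


# Hypothetical example values (not from the slides).
w = {"W1": 0.15, "W2": 0.20, "W3": 0.25, "W4": 0.30, "W5": 0.40, "W6": 0.45}
x1, x2, y = 0.05, 0.10, 0.01
grads = hidden_layer_grads(x1, x2, y, w)
print(grads)
```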

3. Final Gradient Formulas:

- Output Layer:

$$\large \frac{\partial E}{\partial W_5} = -(y - out)(out)(1 - out)(h_1)$$

$$\large \frac{\partial E}{\partial W_6} = -(y - out)(out)(1 - out)(h_2)$$

- Hidden Layer:

$$\large \frac{\partial E}{\partial W_1} = -(y - out)(out)(1 - out)(W_5)(h_1)(1 - h_1)(X_1)$$

$$\large \frac{\partial E}{\partial W_2} = -(y - out)(out)(1 - out)(W_5)(h_1)(1 - h_1)(X_2)$$

$$\large \frac{\partial E}{\partial W_3} = -(y - out)(out)(1 - out)(W_6)(h_2)(1 - h_2)(X_1)$$

$$\large \frac{\partial E}{\partial W_4} = -(y - out)(out)(1 - out)(W_6)(h_2)(1 - h_2)(X_2)$$
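Putting the six formulas together gives one backpropagation step. The sketch below assumes the same Ex.2 wiring, plain gradient descent with a hypothetical learning rate `lr = 0.5`, and made-up weights, inputs and target; `gradients` and `gradient_step` are illustrative names. It is meant only to show that moving each weight against its gradient lowers $E$ on this example.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    """Logistic function, split into branches to avoid overflow in math.exp."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(x1: float, x2: float, w: Dict[str, float]) -> Tuple[float, float, float]:
    """(h1, h2, out) for the assumed Ex.2 wiring, no biases."""
    h1 = sigmoid(w["W1"] * x1 + w["W2"] * x2)
    h2 = sigmoid(w["W3"] * x1 + w["W4"] * x2)
    out = sigmoid(w["W5"] * h1 + w["W6"] * h2)
    return h1, h2, out


def error(y: float, out: float) -> float:
    """Squared error E = 1/2 (y - out)^2."""
    return 0.5 * (y - out) ** 2


def gradients(x1: float, x2: float, y: float, w: Dict[str, float]) -> Dict[str, float]:
    """All six final gradient formulas, evaluated at the current weights."""
    h1, h2, out = forward(x1, x2, w)
    delta_out = -(y - out) * out * (1.0 - out)
    delta_h1 = delta_out * w["W5"] * h1 * (1.0 - h1)
    delta_h2 = delta_out * w["W6"] * h2 * (1.0 - h2)
    return {
        "W5": delta_out * h1, "W6": delta_out * h2,
        "W1": delta_h1 * x1, "W2": delta_h1 * x2,
        "W3": delta_h2 * x1, "W4": delta_h2 * x2,
    }


def gradient_step(x1: float, x2: float, y: float, w: Dict[str, float], lr: float) -> Dict[str, float]:
    """Compute every gradient with the old weights first, then update all weights."""
    g = gradients(x1, x2, y, w)
    return {name: w[name] - lr * g[name] for name in w}


# Hypothetical example values (not from the slides).
w = {"W1": 0.15, "W2": 0.20, "W3": 0.25, "W4": 0.30, "W5": 0.40, "W6": 0.45}
x1, x2, y = 0.05, 0.10, 0.01
before = error(y, forward(x1, x2, w)[2])
w_new = gradient_step(x1, x2, y, w, lr=0.5)
after = error(y, forward(x1, x2, w_new)[2])
print(before, after)
```

All gradients are computed from the old weights before any weight changes; updating $W_5$ first and then reusing it for $\frac{\partial E}{\partial W_1}$ would no longer match the formulas above.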